-

Notifications

You must be signed in to change notification settings - Fork 0

Swap our metrics provider from statsd to otel-metrics + instrument our Fetcher threadpool #657

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

## Summary

## Checklist

- [ ] Added Unit Tests

- [ ] Covered by existing CI

- [ ] Integration tested

- [ ] Documentation update

<!-- This is an auto-generated comment: release notes by coderabbit.ai

-->

## Summary by CodeRabbit

- **New Features**

- Introduced a new query to retrieve purchase records with date range

filtering.

- Enhanced data retrieval by including additional contextual metadata

for improved insights.

<!-- end of auto-generated comment: release notes by coderabbit.ai -->

<!-- av pr metadata

This information is embedded by the av CLI when creating PRs to track

the status of stacks when using Aviator. Please do not delete or edit

this section of the PR.

```

{"parent":"main","parentHead":"","trunk":"main"}

```

-->

---------

Co-authored-by: Thomas Chow <[email protected]>

## Summary

## Checklist

- [ ] Added Unit Tests

- [ ] Covered by existing CI

- [ ] Integration tested

- [ ] Documentation update

<!-- This is an auto-generated comment: release notes by coderabbit.ai

-->

## Summary by CodeRabbit

- **New Features**

- Introduced dedicated testing workflows covering multiple system

components to enhance overall reliability.

- Added new test suites for various components to enhance testing

granularity.

- **Refactor**

- Streamlined code organization with improved package structures and

consolidated imports across test modules.

- **Chores**

- Upgraded automated testing configurations with optimized resource

settings for improved performance and stability.

<!-- end of auto-generated comment: release notes by coderabbit.ai -->

<!-- av pr metadata

This information is embedded by the av CLI when creating PRs to track

the status of stacks when using Aviator. Please do not delete or edit

this section of the PR.

```

{"parent":"main","parentHead":"","trunk":"main"}

```

-->

---------

Co-authored-by: Thomas Chow <[email protected]>

## Summary

## Checklist

- [ ] Added Unit Tests

- [ ] Covered by existing CI

- [ ] Integration tested

- [ ] Documentation update

<!-- av pr metadata

This information is embedded by the av CLI when creating PRs to track

the status of stacks when using Aviator. Please do not delete or edit

this section of the PR.

```

{"parent":"main","parentHead":"","trunk":"main"}

```

-->

<!-- This is an auto-generated comment: release notes by coderabbit.ai

-->

## Summary by CodeRabbit

- **Chores**

- Adjusted the test execution timeout setting from a longer duration to

900 seconds to ensure tests complete more promptly.

<!-- end of auto-generated comment: release notes by coderabbit.ai -->

Co-authored-by: Thomas Chow <[email protected]>

…nd not pushed to remote (#385) ## Summary I've been seeing that it's difficult to track what changes went into artifacts we push to etsy and canary. Especially when it comes to tracking performance regressions for spark jobs one day to the next. Adding a check to not allow any pushes to any customer artifacts if the branch is dirty. All changes need to at least be pushed to remote. And adding a metadata tag of the commit and branch ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Introduced consistency checks during the build and upload process to verify that local changes are committed and branches are in sync. - Enhanced artifact metadata now includes additional context about the code state at the time of upload. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

… top (#380) ## Summary While trying to read the updated beacon top topic we hit issues as the number of avro fields is greater than the Spark codegen limit default of 100. Thanks to this the wholestage codegen code is incorrect and we either end up with segfaults (unit tests) or garbled events (prod flink jobs). This PR bumps the limit to allow us to read beacon top (374 fields) as well as adds an assert in Catalyst util's whole stage code gen code to fail if we encounter this again in the future for a higher number of fields than our current bumped limit. ## Checklist - [X] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Enhanced data processing robustness with improved handling and early error detection for large schemas. - Refined SQL query formatting for clearer logical conditions. - **Tests** - Added a new validation for large schema deserialization. - Updated test definitions to improve structure and readability. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary

- Make the thrift gen python executable, use `py_binary` to support

python generally

## Checklist

- [ ] Added Unit Tests

- [ ] Covered by existing CI

- [ ] Integration tested

- [ ] Documentation update

<!-- This is an auto-generated comment: release notes by coderabbit.ai

-->

## Summary by CodeRabbit

- **Chores**

- Enhanced the build process for a key automation tool by streamlining

its execution and command handling, leading to improved overall build

reliability and performance.

- Transitioned the export mechanism of a Python script to a defined

executable binary target for better integration within the build system.

<!-- end of auto-generated comment: release notes by coderabbit.ai -->

<!-- av pr metadata

This information is embedded by the av CLI when creating PRs to track

the status of stacks when using Aviator. Please do not delete or edit

this section of the PR.

```

{"parent":"main","parentHead":"","trunk":"main"}

```

-->

Co-authored-by: Thomas Chow <[email protected]>

## Summary - Release Notes: https://spark.apache.org/releases/spark-release-3-5-4.html - https://issues.apache.org/jira/browse/SPARK-49791 is a good one for us. ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- av pr metadata This information is embedded by the av CLI when creating PRs to track the status of stacks when using Aviator. Please do not delete or edit this section of the PR. ``` {"parent":"main","parentHead":"","trunk":"main"} ``` --> <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Chores** - Upgraded underlying Apache Spark libraries to version 3.5.4, delivering enhanced performance, stability, and compatibility. This update improves processing efficiency and backend reliability, ensuring smoother and more secure data operations. End-users may notice more robust and responsive interactions as a result of these improvements, further enhancing overall system performance. <!-- end of auto-generated comment: release notes by coderabbit.ai --> Co-authored-by: Thomas Chow <[email protected]>

## Summary

- Even though I'm eager to get ahead here, let's not go too crazy and

accidentally shoot ourselves in the foot. Let's stay pinned to what our

clusters have (3.5.1) until those upgrade.

## Checklist

- [ ] Added Unit Tests

- [ ] Covered by existing CI

- [ ] Integration tested

- [ ] Documentation update

<!-- av pr metadata

This information is embedded by the av CLI when creating PRs to track

the status of stacks when using Aviator. Please do not delete or edit

this section of the PR.

```

{"parent":"main","parentHead":"","trunk":"main"}

```

-->

<!-- This is an auto-generated comment: release notes by coderabbit.ai

-->

## Summary by CodeRabbit

- **Chores**

- Updated core Spark libraries—impacting SQL, Hive, Streaming, and Avro

features—to version 3.5.1 to ensure enhanced stability and improved

integration across Spark-powered functionalities.

<!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary Grant and I were chatting about the high number of hosts needed for the beacon top Flink jobs (24). This is because the topic parallelism is 96 and we squeeze 4 slots per TM (so 96 / 4 = 24 hosts). Given that folks often over provision Kafka topics in terms of partitions, going with a default of scaling down by 1/4th. Will look into wiring up Flink autoscaling as a follow up to not have this hardcoded. ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Refactor** - Optimized stream processing by refining the parallelism calculation. The system now applies a scaling factor to better adjust the number of active processing units, which may result in improved efficiency under certain conditions. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary

## Checklist

- [ ] Added Unit Tests

- [ ] Covered by existing CI

- [ ] Integration tested

- [ ] Documentation update

<!-- This is an auto-generated comment: release notes by coderabbit.ai

-->

## Summary by CodeRabbit

- **New Features**

- Added interactive transformation controls for chart zooming,

resetting, and scroll modes.

- Introduced a detailed join configuration view and enhanced node graph

visualization with tooltips and collapsible details.

- Implemented a new component for displaying configuration properties

related to joins in a tabular format.

- Added new dependencies to enhance functionality and updated existing

ones for better performance.

- Introduced a new method for retrieving edges in the node graph and

improved lineage data handling.

- Added a new `StatusBar` component for job status representation.

- Introduced sample data structures for lineage and job tracking

responses.

- Implemented a new function for managing expandable row states in job

tracking tables.

- **UI Improvements**

- Refined chart layouts with updated padding and legend styling.

- Improved navigation headers and tabs for clearer page organization.

- Enhanced visual feedback for missing drift or distribution data.

- Allowed interaction with previously disabled elements for improved

user experience.

- **Bug Fixes**

- Improved error handling for data fetching and display logic.

- **Chores**

- Upgraded underlying dependencies and animations for smoother

performance.

- Removed obsolete files and functions related to job tracking data

management.

<!-- end of auto-generated comment: release notes by coderabbit.ai -->

<!-- av pr metadata

This information is embedded by the av CLI when creating PRs to track

the status of stacks when using Aviator. Please do not delete or edit

this section of the PR.

```

{"parent":"main","parentHead":"","trunk":"main"}

```

-->

---------

Co-authored-by: Sean Lynch <[email protected]>

Co-authored-by: Ken Morton <[email protected]>

## Summary ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Documentation** - Clarified command instructions and added informational notes to set expectations during initial builds. - **New Features** - Introduced new build options for modular construction of components, including dedicated commands for hub and cloud modules. - Added an automated script to streamline the frontend build process. - **Chores** - Updated container setup and startup processes to utilize revised deployment artifacts. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary

- trim down tableutils

- add iceberg runtime dependency to cloud_gcp

## Checklist

- [ ] Added Unit Tests

- [ ] Covered by existing CI

- [ ] Integration tested

- [ ] Documentation update

<!-- This is an auto-generated comment: release notes by coderabbit.ai

-->

## Summary by CodeRabbit

- **New Features**

- Added a runtime dependency to enhance Spark processing.

- Introduced a consolidated method for computing partition ranges.

- **Refactor**

- Streamlined import sections and simplified join analysis by removing

redundant permission checks.

- **Bug Fixes**

- Removed methods related to table permission checks, impacting access

control functionality.

- **Tests**

- Removed an outdated test for table permission verification.

- **Chores**

- Updated the project’s dependency configuration to include the new

Spark runtime artifact.

<!-- end of auto-generated comment: release notes by coderabbit.ai -->

<!-- av pr metadata

This information is embedded by the av CLI when creating PRs to track

the status of stacks when using Aviator. Please do not delete or edit

this section of the PR.

```

{"parent":"main","parentHead":"","trunk":"main"}

```

-->

---------

Co-authored-by: Thomas Chow <[email protected]>

## Summary Changed the backend code to only compute 3 percentiles (p5, p50, p95) for returning to the frontend. ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Bug Fixes** - Enhanced statistical data processing to consistently handle cases with missing values by using a robust placeholder, ensuring clearer downstream analytics. - Adjusted the percentile chart configuration so that the 95th, 50th, and 5th percentiles are accurately rendered, providing more reliable insights for users. - Relaxed the null ratio validation in summary data, allowing for a broader acceptance of null values, which may affect drift metric interpretations. - **New Features** - Introduced methods for converting percentile strings to index values and filtering percentiles based on user-defined requests, improving data handling and representation. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary Changes to support builds/tests with both scala 2.12 and 2.13 versions. By default we build against 2.12 version, pass "--config scala_2.13" option to "bazel build/test" to override it. ScalaFmt seems to be breaking for 2.13 using bazel rules_scala package, [fix](bazel-contrib/rules_scala#1631) is already deployed but a release with that change is not available yet, so temporarily disabled ScalaFmt checks for 2.13 will enable later once the fix is released. ## Checklist - [ ] Added Unit Tests - [x] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Enabled flexible Scala version selection (2.12 and 2.13) for smoother builds and enhanced compatibility. - Introduced a default Scala version constant and a repository rule for improved version management. - Added support for additional Scala 2.13 dependencies in the build configuration. - **Refactor and Improvements** - Streamlined build and dependency management for increased stability and performance. - Consolidated collection conversion utilities to boost reliability in tests and runtime processing. - Enhanced type safety and clarity in collection handling across various modules. - Improved handling of Scala collections and maps throughout the codebase for better type consistency and safety. - Updated method implementations to ensure explicit type conversions, enhancing clarity and preventing runtime errors. - Modified method signatures and internal logic to utilize `Seq` for improved type clarity and consistency. - Enhanced the `maven_artifact` function to accept an optional version parameter for better dependency management. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

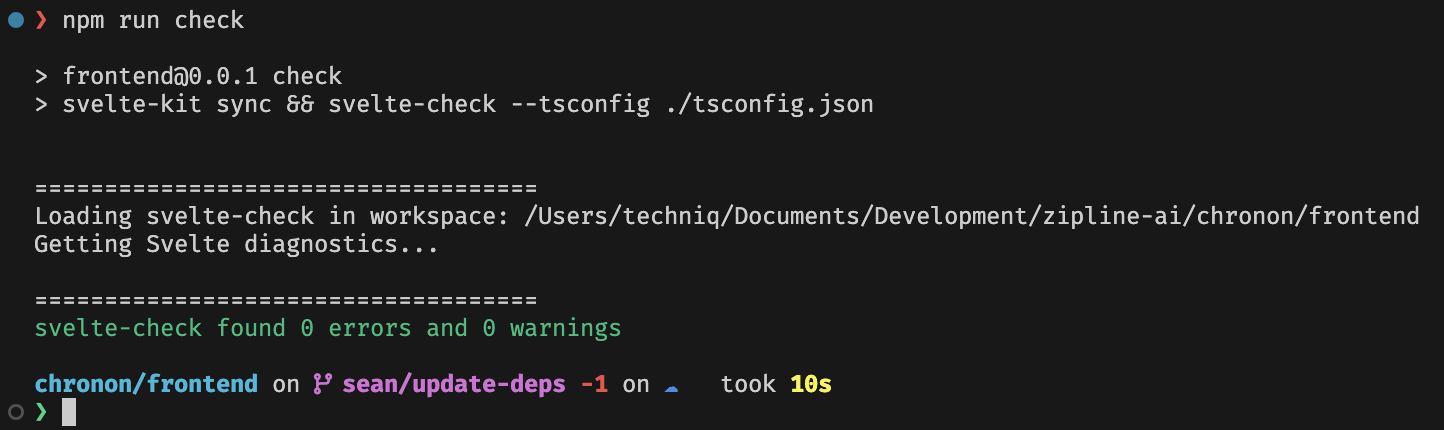

…velte (#399) ## Summary Updated all dependencies to latest (ignore Tailwind and bits-ui for now) so we don't lag too far behind. The LayerChart bump also fixes a BarChart tooltip [issue](https://github.com/techniq/layerchart/releases/tag/layerchart%400.99.2). Ran `npm run check`, `npm run lint`, and `npm run test:unit` locally (although CI will validate). Also kicked the app around locally to spot any regressions.    ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Chores** - Updated multiple dependencies and development tools to their latest versions for improved performance and compatibility. - **Refactor** - Enhanced UI components by enforcing stricter type definitions and consistency. - Improved the presentation and configuration of the `Inspect` component in the overview page. These updates streamline the build process and ensure a more reliable, consistent user interface without altering its overall functionality. <!-- end of auto-generated comment: release notes by coderabbit.ai --> <!-- av pr metadata This information is embedded by the av CLI when creating PRs to track the status of stacks when using Aviator. Please do not delete or edit this section of the PR. ``` {"parent":"main","parentHead":"","trunk":"main"} ``` --> --------- Co-authored-by: Sean Lynch <[email protected]>

## Summary

## Checklist

- [ ] Added Unit Tests

- [ ] Covered by existing CI

- [ ] Integration tested

- [ ] Documentation update

<!-- av pr metadata

This information is embedded by the av CLI when creating PRs to track

the status of stacks when using Aviator. Please do not delete or edit

this section of the PR.

```

{"parent":"main","parentHead":"","trunk":"main"}

```

-->

<!-- This is an auto-generated comment: release notes by coderabbit.ai

-->

## Summary by CodeRabbit

- **Chores**

- Updated automated testing triggers to run for changes on any branch,

ensuring broader test coverage for frontend updates.

<!-- end of auto-generated comment: release notes by coderabbit.ai -->

Co-authored-by: Sean Lynch <[email protected]>

## Summary ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Introduced new methods for retrieving lineage information for group by, model, and staging query configurations. - Enhanced configuration detail pages with improved navigation and interactive elements. - Added a new asynchronous function for handling data fetching based on configuration parameters. - Implemented a tooltip feature in the DateRangeSelector component for better user guidance. - Updated the PageHeader and TabsTrigger components to enhance routing and display context. - Added URLs for various logical types to improve navigation. - **Refactor** - Standardized lineage conversion processes and updated data references across views. - Improved reactive behavior and routing for configuration and observability pages. - Simplified layout structures for better scrolling behavior and UI clarity. - **Chores** - Removed deprecated endpoints, components, and legacy pages to streamline the application. <!-- end of auto-generated comment: release notes by coderabbit.ai --> --- - To see the specific tasks where the Asana app for GitHub is being used, see below: - https://app.asana.com/0/0/1209162816962776 - https://app.asana.com/0/0/1209438100557823 <!-- av pr metadata This information is embedded by the av CLI when creating PRs to track the status of stacks when using Aviator. Please do not delete or edit this section of the PR. ``` {"parent":"main","parentHead":"","trunk":"main"} ``` --> --------- Co-authored-by: Sean Lynch <[email protected]>

## Summary - #381 introduced the ability to configure a partition column at the node-level. This PR simply fixes a missed spot on the plumbing of the new StagingQuery attribute. ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Enhanced the query builder to support specifying a partition column, providing greater customization for query formation and partitioning. - **Improvements** - Improved handling of partition columns by introducing a fallback mechanism to ensure valid values are used when necessary. <!-- end of auto-generated comment: release notes by coderabbit.ai --> <!-- av pr metadata This information is embedded by the av CLI when creating PRs to track the status of stacks when using Aviator. Please do not delete or edit this section of the PR. ``` {"parent":"main","parentHead":"","trunk":"main"} ``` --> --------- Co-authored-by: Thomas Chow <[email protected]>

## Summary To add CI checks for making sure we are able to build and test all modules on both scala 2.12 and 2.13 versions. ## Checklist - [ ] Added Unit Tests - [x] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Chores** - Updated automated testing workflows to support Scala 2.12 and added new workflows for Scala 2.13, ensuring consistent testing for both Spark and non-Spark modules. - **Documentation** - Enhanced build instructions with updated commands for creating Uber Jars and new automation shortcuts to streamline code formatting, committing, and pushing changes. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary Added pinning support for both our maven and spark repositories so we don't have to resolve them during builds. Going forward whenever we make any updates to the artifacts in either maven or spark repositories, we would need to re-pin the changed repos using following commands and check-in the updated json files. ``` REPIN=1 bazel run @maven//:pin REPIN=1 bazel run @spark//:pin ``` ## Checklist - [ ] Added Unit Tests - [x] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Integrated enhanced repository management for Maven and Spark, providing improved dependency installation. - Added support for JSON configuration files for Maven and Spark installations. - **Chores** - Updated documentation to include instructions on pinning Maven artifacts and managing dependency versions effectively. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

A VSCode plugin for feature authoring that detects errors and uses data sampling in order to speed up the iteration cycle. The goal is to reduce the amount of memorizing commands, typing / clicking, waiting for clusters to be spun up, and jobs to finish. In this example, we have a complex expression operating on nested data. The eval button appears above Chronon types. When you click on the Eval button, it samples your data, runs your code and shows errors or transformed result within seconds.  ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [x] Integration tested (see above) - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Introduced a new Visual Studio Code extension that enhances Python development. - The extension displays an evaluation button alongside specific assignment statements in Python files, allowing users to trigger evaluation commands directly in the terminal. - Added a command to execute evaluation actions related to Zipline AI configurations. - **Documentation** - Added a new LICENSE file containing the MIT License text. - **Configuration** - Introduced new configuration files for TypeScript and Webpack to support the extension's development and build processes. - **Exclusions** - Updated `.gitignore` and added `.vscodeignore` to streamline version control and packaging processes. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary Moved scala dependencies to separate scala_2_12 and scala_2_13 repositories so we can load the right repo based on config instead of loading both. ## Checklist - [ ] Added Unit Tests - [x] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit ## Summary by CodeRabbit - **Chores** - Upgraded Scala dependencies to newer versions with updated verification, ensuring improved stability. - Removed outdated package references to streamline dependency management. - Introduced new repository configurations for Scala 2.12 and 2.13 to enhance dependency management. - Added `.gitignore` entry to exclude `node_modules` in the `authoring/vscode` path. - Created `LICENSE` file with MIT License text for the new extension. - **New Features** - Introduced a Visual Studio Code extension with a CodeLens provider for Python files, allowing users to evaluate variables directly in the editor. - **Refactor** - Updated dependency declarations to utilize a new method for handling Scala artifacts, improving consistency across the project. <!-- end of auto-generated comment: release notes by coderabbit.ai --> --------- Co-authored-by: Nikhil Simha <[email protected]>

## Summary Per the conversation on [Slack](https://zipline-2kh4520.slack.com/archives/C0880ECQ0EN/p1739982805433429). We don't currently have an example where a `GroupBy` takes in both a streaming and a batch source (mostly are a single source, and a few that are not are batch only), but the `.some()`/any logic should be [right](https://zipline-2kh4520.slack.com/archives/C0880ECQ0EN/p1739830806387109) Conf | Before | After --- | --- | --- Join |  |  GroupBy (no change) |  |  ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- av pr metadata This information is embedded by the av CLI when creating PRs to track the status of stacks when using Aviator. Please do not delete or edit this section of the PR. ``` {"parent":"main","parentHead":"","trunk":"main"} ``` --> --- - To see the specific tasks where the Asana app for GitHub is being used, see below: - https://app.asana.com/0/0/1209446053233127 <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Refactor** - Improved the application’s handling of streaming content for enhanced reliability and performance. The updates streamline the evaluation of live data and combined streams, contributing to a more consistent and responsive user experience. <!-- end of auto-generated comment: release notes by coderabbit.ai --> Co-authored-by: Sean Lynch <[email protected]>

## Summary Not sure the root cause of using `queryParameters()` within the drift/distributions route is not reactive when client-side routed (clicking on the Observability tab or clicking between Drift and Distributions) but works if you refresh the page. It is reactive within `ActionsButtons` and generally everywhere else. At some point I'll workup a minimal repo and report on https://github.com/paoloricciuti/sveltekit-search-params, but this approach is both simpler and resolves the issue (and still uses the same param config). My initial guess is it might be due to subscribing to the same params with 2 different instances on the same page, but not positive without investigating (and possibly not related). [Asana issue](https://app.asana.com/0/1208932365725930/1209459569820188) https://github.com/user-attachments/assets/3b825bae-484b-4dba-8c35-0e84b0b65ce0 ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- av pr metadata This information is embedded by the av CLI when creating PRs to track the status of stacks when using Aviator. Please do not delete or edit this section of the PR. ``` {"parent":"main","parentHead":"","trunk":"main"} ``` --> --- - To see the specific tasks where the Asana app for GitHub is being used, see below: - https://app.asana.com/0/0/1209459569820188 Co-authored-by: Sean Lynch <[email protected]>

## Summary Before | After --- | ---  |  ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update --- - To see the specific tasks where the Asana app for GitHub is being used, see below: - https://app.asana.com/0/0/1209438100557826 <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Enhanced the configuration overview by introducing intuitive, color-coded icons. Now, the statuses for online and production properties are displayed with clear visual indicators, offering immediate feedback on their state. - Updated the visual representation of the "False" state in the badge component for consistency and improved clarity. <!-- end of auto-generated comment: release notes by coderabbit.ai --> <!-- av pr metadata This information is embedded by the av CLI when creating PRs to track the status of stacks when using Aviator. Please do not delete or edit this section of the PR. ``` {"parent":"main","parentHead":"","trunk":"main"} ``` --> --------- Co-authored-by: Sean Lynch <[email protected]>

## Summary Adds AWS build and push commands to the distribution script. ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Introduced an automated quickstart process for GCP deployments. - Enhanced the build and upload tool with flexible command-line options, supporting artifact creation for both AWS and GCP environments. - Added a new script for running the Zipline quickstart on GCP. - **Refactor** - Updated the AWS quickstart process to ensure consistent execution. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

…FilePath and replacing `/` to `.` in MetaData names (#398) ## Summary ^^^ Tested on the etsy laptop. ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Bug Fixes** - Improved error handling to explicitly report when configuration values are missing. - **New Features** - Introduced standardized constants for various configuration types, ensuring consistent key naming. - **Refactor** - Unified metadata processing by using direct metadata names instead of file paths. - Enhanced type safety in configuration options for clearer and more reliable behavior. - **Tests** - Updated test cases and parameters to reflect the improved metadata and configuration handling. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

Reverts #373 Passing in options to push to only one customer is broken. <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Refactor** - Streamlined the deployment process to automatically build and upload artifacts exclusively to Google Cloud Platform. - Removed configuration options and handling for an alternative cloud provider, resulting in a simpler, more focused workflow. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary building join output schema should belong to metadata store - and also reduces the size of fetcher. ## Checklist - [ ] Added Unit Tests - [x] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Introduced an optimized caching mechanism for data join operations, resulting in improved performance and reliability. - Added new methods to facilitate the creation and management of join codecs. - **Bug Fixes** - Enhanced error handling for join codec operations, ensuring clearer context for failures. - **Documentation** - Improved code readability and clarity through updated comments and method signatures. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary Skeleton implementation for Agent service with job polling and status reporting happening at regular intervals which can be configured. A lot of place holder code which is going to change a lot in coming PR's so didn't add tests for now, will add them in coming PR's ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Introduced configuration management for agent components, including polling intervals and service endpoints. - Added abstractions and in-memory implementations for job execution and key-value storage services. - Implemented periodic job polling and status reporting handlers for orchestration agents. - Added Vert.x verticles to manage job polling and status reporting workflows. - **Improvements** - Enhanced job struct to use a detailed job info field. - Expanded job status types with an explicit "unknown" state and updated status codes. - **Dependency Updates** - Included additional Vert.x dependencies for URI template support. - Packaged resource files into the orchestration binary. - **Bug Fixes** - Updated job handling logic and tests to reflect changes in job ID access patterns. - **Tests** - Adjusted tests to accommodate the new job info structure. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary

- We need to bring back the v1 version of Datasource for spark bigquery

connector, since it supports partition pushdown. And alternative

project_id's. The catalog version in the spark bigquery connector does

not support that.

## Checklist

- [ ] Added Unit Tests

- [ ] Covered by existing CI

- [ ] Integration tested

- [ ] Documentation update

<!-- This is an auto-generated comment: release notes by coderabbit.ai

-->

## Summary by CodeRabbit

## Summary by CodeRabbit

- **New Features**

- Enhanced table reading capabilities with support for applying

partition filters and combining multiple predicates for more flexible

data queries.

- **Refactor**

- Improved internal handling of predicate filters and table loading

logic for more consistent and maintainable data access.

- Refined data filtering by explicitly incorporating partition column

information for more precise queries.

- **Chores**

- Updated script to ensure temporary files are cleaned up more reliably

during installation processes.

<!-- end of auto-generated comment: release notes by coderabbit.ai -->

<!-- av pr metadata

This information is embedded by the av CLI when creating PRs to track

the status of stacks when using Aviator. Please do not delete or edit

this section of the PR.

```

{"parent":"main","parentHead":"","trunk":"main"}

```

-->

---------

Co-authored-by: Thomas Chow <[email protected]>

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Actionable comments posted: 5

🧹 Nitpick comments (3)

service_commons/src/main/java/ai/chronon/service/ChrononServiceLauncher.java (2)

27-33: Update class comment.Comment on lines 28-30 still refers to StatsD, should be updated to reflect OpenTelemetry usage.

92-94: Verify duration format.Ensure exportInterval is in ISO-8601 format (e.g., "PT15S") for Duration.parse().

cloud_gcp/src/main/scala/ai/chronon/integrations/cloud_gcp/BigTableKVStoreImpl.scala (1)

155-159: Repeatedcopy(...)per call is GC‑heavyCreating a new

Contextfor every dataset on every call allocates objects and populates the tag cache again.

Consider caching only once per dataset and re‑using the same instance.

📜 Review details

Configuration used: CodeRabbit UI

Review profile: CHILL

Plan: Pro (Legacy)

📒 Files selected for processing (15)

cloud_aws/src/main/scala/ai/chronon/integrations/aws/DynamoDBKVStoreImpl.scala(2 hunks)cloud_gcp/src/main/scala/ai/chronon/integrations/cloud_gcp/BigTableKVStoreImpl.scala(18 hunks)docker/fetcher/start.sh(1 hunks)online/src/main/java/ai/chronon/online/JavaFetcher.java(7 hunks)online/src/main/scala/ai/chronon/online/Api.scala(3 hunks)online/src/main/scala/ai/chronon/online/fetcher/FetchContext.scala(2 hunks)online/src/main/scala/ai/chronon/online/fetcher/Fetcher.scala(9 hunks)online/src/main/scala/ai/chronon/online/fetcher/FetcherCache.scala(3 hunks)online/src/main/scala/ai/chronon/online/metrics/Metrics.scala(6 hunks)online/src/main/scala/ai/chronon/online/metrics/MetricsReporter.scala(1 hunks)online/src/main/scala/ai/chronon/online/metrics/OtelMetricsReporter.scala(1 hunks)online/src/test/scala/ai/chronon/online/test/TagsTest.scala(2 hunks)service/src/main/java/ai/chronon/service/FetcherVerticle.java(3 hunks)service_commons/src/main/java/ai/chronon/service/ChrononServiceLauncher.java(3 hunks)service_commons/src/main/java/ai/chronon/service/OpenTelemetryProvider.java(1 hunks)

🚧 Files skipped from review as they are similar to previous changes (8)

- online/src/main/scala/ai/chronon/online/fetcher/FetchContext.scala

- online/src/test/scala/ai/chronon/online/test/TagsTest.scala

- service/src/main/java/ai/chronon/service/FetcherVerticle.java

- cloud_aws/src/main/scala/ai/chronon/integrations/aws/DynamoDBKVStoreImpl.scala

- online/src/main/scala/ai/chronon/online/fetcher/FetcherCache.scala

- online/src/main/scala/ai/chronon/online/Api.scala

- online/src/main/scala/ai/chronon/online/fetcher/Fetcher.scala

- online/src/main/java/ai/chronon/online/JavaFetcher.java

🧰 Additional context used

🧬 Code Graph Analysis (1)

online/src/main/scala/ai/chronon/online/metrics/OtelMetricsReporter.scala (1)

online/src/main/scala/ai/chronon/online/metrics/Metrics.scala (9)

Metrics(25-219)Context(89-132)Context(134-218)toTags(158-183)count(213-213)gauge(215-215)gauge(217-217)distribution(207-210)distribution(210-213)

⏰ Context from checks skipped due to timeout of 90000ms (35)

- GitHub Check: service_commons_tests

- GitHub Check: service_tests

- GitHub Check: cloud_gcp_tests

- GitHub Check: online_tests

- GitHub Check: cloud_aws_tests

- GitHub Check: streaming_tests

- GitHub Check: hub_tests

- GitHub Check: service_tests

- GitHub Check: join_tests

- GitHub Check: streaming_tests

- GitHub Check: flink_tests

- GitHub Check: online_tests

- GitHub Check: spark_tests

- GitHub Check: cloud_gcp_tests

- GitHub Check: groupby_tests

- GitHub Check: join_tests

- GitHub Check: hub_tests

- GitHub Check: analyzer_tests

- GitHub Check: cloud_aws_tests

- GitHub Check: groupby_tests

- GitHub Check: spark_tests

- GitHub Check: flink_tests

- GitHub Check: api_tests

- GitHub Check: fetcher_tests

- GitHub Check: batch_tests

- GitHub Check: aggregator_tests

- GitHub Check: aggregator_tests

- GitHub Check: analyzer_tests

- GitHub Check: orchestration_tests

- GitHub Check: fetcher_tests

- GitHub Check: api_tests

- GitHub Check: batch_tests

- GitHub Check: scala_compile_fmt_fix

- GitHub Check: orchestration_tests

- GitHub Check: enforce_triggered_workflows

🔇 Additional comments (6)

service_commons/src/main/java/ai/chronon/service/OpenTelemetryProvider.java (1)

11-21: Implementation looks clean.Thread-safe singleton design with Optional for safe instance access.

service_commons/src/main/java/ai/chronon/service/ChrononServiceLauncher.java (1)

65-107: Looks good, but consider caching the OpenTelemetry instance.The implementation correctly sets up OpenTelemetry for metrics reporting.

docker/fetcher/start.sh (1)

21-22: Log message update is appropriate.Script correctly checks for OTLP endpoint instead of StatsD host.

online/src/main/scala/ai/chronon/online/metrics/MetricsReporter.scala (2)

5-10: Clean provider interface.Generic type parameter enables flexibility for different client implementations.

12-24: Well-designed reporter trait.Interface supports all common metric types with consistent method signatures.

online/src/main/scala/ai/chronon/online/metrics/OtelMetricsReporter.scala (1)

62-66:DoubleGaugebuild call may not compile on latest OTel

meter.gaugeBuilder(metric).build()returns an observable builder that expects a callback, not a directset.

Verify against the OTel API inbuild.sbt; you may needofDoubles().buildWithCallback(...)or switch toDoubleUpDownCounter.

| OtlpConfig otlpConfig = key -> { | ||

| switch (key) { | ||

| case "otlp.url": | ||

| return exporterUrl; | ||

| case "otlp.step": | ||

| return exportInterval; | ||

| case "otlp.resourceAttributes": | ||

| return "service.name=" + serviceName; | ||

| default: | ||

| return null; | ||

| } | ||

| }; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

💡 Verification agent

🧩 Analysis chain

Verify Micrometer OTLP config keys.

Modern Micrometer versions (≥1.12) may expect different configuration keys. Consider confirming:

- case "otlp.url":

+ case "otlp.metrics.export.url":🌐 Web query:

What configuration keys does OtlpMeterRegistry use in Micrometer version 1.12+?

💡 Result:

Configuration Keys for OtlpMeterRegistry in Micrometer 1.12+

OtlpMeterRegistry uses a set of configuration keys to control behaviors such as endpoint selection, timeouts, resource attributes, and other telemetry settings. These keys are provided to the registry via the OtlpConfig interface, which supports property-based configuration—commonly via Spring Boot properties with the prefix management.otlp.metrics.export[1].

Common Configuration Keys

Below are the primary configuration keys available for OtlpMeterRegistry in Micrometer 1.12 and newer:

| Key | Purpose | Default/Example Value |

|---|---|---|

enabled |

Whether OTLP export is enabled | true |

endpoint |

The OTLP metrics endpoint (URL) | (none, must be set) |

step |

Frequency to report metrics (duration) | PT1M (1 minute) |

compression |

Compression type for payload (e.g., gzip) |

none |

headers |

Additional headers for requests (key-value pairs) | (none) |

aggregation-temporality |

Preferred temporality for cumulative or delta data | cumulative or delta |

resource-attributes |

Resource attributes attached to all metrics (comma-separated key-value pairs) | See below |

timeout |

Request timeout (duration) | 10s |

base-time-unit |

Base time unit for timers | milliseconds |

Resource Attributes:

- The following attributes are set by default:

telemetry.sdk.name:io.micrometertelemetry.sdk.language:javatelemetry.sdk.version: Micrometer version (e.g.,1.12.0)service.name:unknown_service(overridable byOTEL_SERVICE_NAMEenvironment variable)

- Custom resource attributes can be set using the

resource-attributeskey, or, if empty, loaded from theOTEL_RESOURCE_ATTRIBUTESenvironment variable[1].

Example: Spring Boot Property Mapping

When using Spring Boot, you can set these in your application.properties or application.yaml:

management.otlp.metrics.export.enabled=true

management.otlp.metrics.export.endpoint=https://otel-collector:4318/v1/metrics

management.otlp.metrics.export.step=30s

management.otlp.metrics.export.headers=Authorization=Bearer <token>

management.otlp.metrics.export.aggregation-temporality=delta

management.otlp.metrics.export.resource-attributes=service.name=my-service,env=prod

management.otlp.metrics.export.timeout=5sNotes

- The full set of supported keys is defined by the

OtlpConfiginterface. When implementing or overriding itsget(String key)method, properties are fetched by their keys, typically with the prefixmanagement.otlp.metrics.exportwhen using Spring Boot’s auto-configuration[1][8]. - Environmental variables such as

OTEL_RESOURCE_ATTRIBUTESandOTEL_SERVICE_NAMEcan influence resource attributes and take precedence over some configuration keys[1]. - The list above covers the most commonly used and documented keys as of Micrometer 1.12+; newer versions may introduce additional or changed keys.

For the latest list and advanced options, consult the official Micrometer OTLP documentation[1].

Citations:

- 1: https://docs.micrometer.io/micrometer/reference/implementations/otlp.html

- 2: https://github.com/micrometer-metrics/micrometer/wiki/1.13-Migration-Guide

- 3: https://docs.micrometer.io/micrometer/reference/implementations/prometheus.html

- 4: https://github.com/micrometer-metrics/micrometer/blob/main/implementations/micrometer-registry-otlp/src/main/java/io/micrometer/registry/otlp/OtlpMeterRegistry.java

- 5: https://docs.dynatrace.com/docs/ingest-from/extend-dynatrace/extend-metrics/ingestion-methods/micrometer

- 6: https://spring.io/blog/2018/03/16/micrometer-spring-boot-2-s-new-application-metrics-collector

- 7: https://docs.spring.io/spring-boot/reference/actuator/metrics.html

- 8: https://javadoc.io/static/io.micrometer/micrometer-registry-otlp/1.12.12/allclasses-index.html

- 9: https://www.javadoc.io/doc/io.micrometer/micrometer-core/1.0.3/io/micrometer/core/instrument/MeterRegistry.Config.html

- 10: https://mvnrepository.com/artifact/io.micrometer/micrometer-registry-otlp/1.12.5

Use correct Micrometer OTLP config keys

Micrometer 1.12+ expects the keys endpoint, step and resource-attributes, not otlp.*.

• File: service_commons/src/main/java/ai/chronon/service/ChrononServiceLauncher.java (lines 52–63)

Suggested diff:

- case "otlp.url":

+ case "endpoint":

return exporterUrl;

- case "otlp.step":

+ case "step":

return exportInterval;

- case "otlp.resourceAttributes":

- return "service.name=" + serviceName;

+ case "resource-attributes":

+ return "service.name=" + serviceName;📝 Committable suggestion

‼️ IMPORTANT

Carefully review the code before committing. Ensure that it accurately replaces the highlighted code, contains no missing lines, and has no issues with indentation. Thoroughly test & benchmark the code to ensure it meets the requirements.

| OtlpConfig otlpConfig = key -> { | |

| switch (key) { | |

| case "otlp.url": | |

| return exporterUrl; | |

| case "otlp.step": | |

| return exportInterval; | |

| case "otlp.resourceAttributes": | |

| return "service.name=" + serviceName; | |

| default: | |

| return null; | |

| } | |

| }; | |

| OtlpConfig otlpConfig = key -> { | |

| switch (key) { | |

| case "endpoint": | |

| return exporterUrl; | |

| case "step": | |

| return exportInterval; | |

| case "resource-attributes": | |

| return "service.name=" + serviceName; | |

| default: | |

| return null; | |

| } | |

| }; |

| protected val metricsContext: Metrics.Context = | ||

| Metrics.Context(Metrics.Environment.KVStore, client = metricsClient).withSuffix("bigtable") | ||

|

|

||

| protected val tableToContext = new TrieMap[String, Metrics.Context]() | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

🛠️ Refactor suggestion

Unbounded tableToContext risks memory leak

TrieMap keeps a distinct Context per BigTable id forever. If the service touches many ephemeral tables, the map will grow without limit.

Add TTL/LRU eviction or at least expose a cleanup hook.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

we are capped here as we have only 4 tables to contend with

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@piyush-zlai Understood! Since you're only working with 4 tables total, the memory usage for tableToContext will remain bounded and small. That's a perfectly reasonable approach given your specific use case.

✏️ Learnings added

Learnt from: piyush-zlai

PR: zipline-ai/chronon#657

File: cloud_gcp/src/main/scala/ai/chronon/integrations/cloud_gcp/BigTableKVStoreImpl.scala:93-97

Timestamp: 2025-04-21T15:10:40.802Z

Learning: The BigTableKVStoreImpl in the chronon codebase only interacts with 4 BigTable tables total, so unbounded caching in tableToContext is not a concern.

Note: Learnings are effective only in the context of similar code segments. To apply general coding guidelines, please configure review instructions. You can manage existing learnings in the app.

| import org.json4s.BuildInfo | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Wrong BuildInfo reference

org.json4s.BuildInfo.version is the json4s library version, not Chronon’s.

Use your project’s generated ai.chronon.BuildInfo (or similar) to avoid misleading instrumentation metadata.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

removed this for now. Will circle back and add a version later once we wire this into our bazel setup

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@piyush-zlai That's a sensible approach. Removing the potentially incorrect BuildInfo reference now and adding the correct version information later when you have proper Bazel integration will avoid any misleading instrumentation metadata. Looking forward to seeing the final implementation when you circle back to it.

| private val counters = new TrieMap[String, LongCounter]() | ||

| private val longGauges = new TrieMap[String, LongGauge]() | ||

| private val doubleGauges = new TrieMap[String, DoubleGauge]() | ||

| private val histograms = new TrieMap[String, LongHistogram]() | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

🛠️ Refactor suggestion

Instrument caches lack eviction

counters, gauges, and histograms grow with every new metric name.

If metric names are dynamic (e.g., per‑dataset), memory will balloon.

Consider bounded caches or pre‑register a fixed set of names.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

the names are fixed based on what we've explicitly configured in the code.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@piyush-zlai Got it, thanks for clarifying. Since the metric names are fixed and explicitly configured in the code rather than dynamically generated, the TrieMaps won't grow unbounded and the current implementation without eviction is appropriate.

✏️ Learnings added

Learnt from: piyush-zlai

PR: zipline-ai/chronon#657

File: online/src/main/scala/ai/chronon/online/metrics/OtelMetricsReporter.scala:30-34

Timestamp: 2025-04-21T15:12:30.670Z

Learning: In the OtelMetricsReporter class, the instrument caches (counters, gauges, histograms) don't need eviction mechanisms because metric names are fixed and explicitly configured in the code, not dynamically generated.

Note: Learnings are effective only in the context of similar code segments. To apply general coding guidelines, please configure review instructions. You can manage existing learnings in the app.

| joinNames.foreach(addTag(Tag.Join, _)) | ||

|

|

||

| val groupByName = Option(groupBy).map(_.sanitize) | ||

| groupByName.foreach(addTag(Tag.GroupBy, _)) | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

🛠️ Refactor suggestion

Only last join tag survives

addTag(Tag.Join, _) inside a loop overwrites previous values; multi‑join contexts lose information.

Aggregating (joinNames.mkString(",")) before a single addTag preserves all joins while keeping one key.

…et run after click.option envvar (#675) ^^^ setting the `artifact_prefix` with the env var after the fact ## Summary ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update

## Summary

- Need the full table name here.

- Also test the filter predicate

## Checklist

- [ ] Added Unit Tests

- [ ] Covered by existing CI

- [ ] Integration tested

- [ ] Documentation update

<!-- This is an auto-generated comment: release notes by coderabbit.ai

-->

## Summary by CodeRabbit

- **Bug Fixes**

- Improved handling of source table names used in join operations,

ensuring more accurate or fully qualified table references.

- Adjusted partition filter handling for BigQuery integration to prevent

unwanted string modifications.

- **Tests**

- Added a new (currently ignored) test for verifying BigQuery partition

pushdown functionality.

<!-- end of auto-generated comment: release notes by coderabbit.ai -->

<!-- av pr metadata

This information is embedded by the av CLI when creating PRs to track

the status of stacks when using Aviator. Please do not delete or edit

this section of the PR.

```

{"parent":"main","parentHead":"","trunk":"main"}

```

-->

---------

Co-authored-by: Thomas Chow <[email protected]>

## Summary ^^^ ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [x] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Introduced a new "CHECK-PARTITIONS" validation step in the GCP quickstart script to verify data partitions before upload. - **Chores** - Improved internal handling of input arguments within the GCP runner for future use. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary Adding logic to dedupe in case we got multiple dependencies for a given node. Note: untested. ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Bug Fixes** - Improved handling of Airflow dependencies by ensuring duplicate entries are removed from dependency lists displayed in metadata. - Streamlined script execution by removing temporary output files and job ID extraction for cleaner command runs. <!-- end of auto-generated comment: release notes by coderabbit.ai --> --------- Co-authored-by: ezvz <[email protected]> Co-authored-by: David Han <[email protected]>

## Summary The job does not support multi-window and multiple labels ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Refactor** - Removed internal validation checks related to label join parts and aggregations, simplifying the label parts handling process. No changes to the user interface or exported functions. <!-- end of auto-generated comment: release notes by coderabbit.ai --> Co-authored-by: ezvz <[email protected]>

## Summary

- Creates a `catalog` module to replace the `format` one we had.

- Add a new bazel lib target for the `catalog` module

- Move TableUtils there, it should live alongside the formatprovider

stuff.

- Rename imports

- Followup is to remove the dependency on the main `spark` lib. This

requires decoupling with `Extensions`. Then we can drastically slim the

deps down in cloud gcp.

## Checklist

- [ ] Added Unit Tests

- [ ] Covered by existing CI

- [ ] Integration tested

- [ ] Documentation update

<!-- av pr metadata

This information is embedded by the av CLI when creating PRs to track

the status of stacks when using Aviator. Please do not delete or edit

this section of the PR.

```

{"parent":"main","parentHead":"","trunk":""}

```

-->

Co-authored-by: Thomas Chow <[email protected]>

## Summary To get us ready to being able to support production streaming workloads on Flink and Dataproc, we need to have metrics published. [OpsAgent](https://cloud.google.com/stackdriver/docs/solutions/agents/ops-agent) is the seeming paved path to reporting metrics from GCloud hosts and OpsAgent does have some support for some of [Flink's metrics reporters](https://nightlies.apache.org/flink/flink-docs-release-1.17/docs/deployment/metric_reporters/). Ended up going with prometheus as that is supported in our current version and OspAgent seems to have decent support for it. This PR can go independently of the infra PR that does the metrics scraping - zipline-ai/infrastructure#46 Tested manually and was able to confirm that metrics are making their way to cloud monitoring -  ## Checklist - [ ] Added Unit Tests - [ ] Covered by existing CI - [X] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **New Features** - Enabled Prometheus metrics reporting for Flink jobs, allowing improved monitoring and observability. - **Chores** - Added Prometheus metrics library as a dependency to the build configuration. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

## Summary Queueing up a change to bump our BT gax and fetcher thread pools back up now that we've cut some redundancies & David's been able to clear out some TPs in MMX. ## Checklist - [x] Added Unit Tests - [ ] Covered by existing CI - [ ] Integration tested - [ ] Documentation update <!-- This is an auto-generated comment: release notes by coderabbit.ai --> ## Summary by CodeRabbit - **Performance Improvements** - Increased thread pool sizes in relevant components to enhance concurrent processing capabilities. <!-- end of auto-generated comment: release notes by coderabbit.ai -->

78cdbab to

9ef2fa9

Compare

9ef2fa9 to

54c82bd

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Actionable comments posted: 0

♻️ Duplicate comments (5)

online/src/main/scala/ai/chronon/online/metrics/Metrics.scala (5)

205-236:⚠️ Potential issue

toTagsnever consumed – metrics lose all contextAll reporter methods use empty maps instead of the context's tags.

- def increment(metric: String): Unit = Context.client.count(prefix(metric), 1, Map.empty) + def increment(metric: String): Unit = Context.client.count(prefix(metric), 1, toTags) - def distribution(metric: String, value: Long): Unit = - Context.client.distribution(prefix(metric), value, Map.empty) + def distribution(metric: String, value: Long): Unit = + Context.client.distribution(prefix(metric), value, toTags) - def count(metric: String, value: Long): Unit = Context.client.count(prefix(metric), value) + def count(metric: String, value: Long): Unit = Context.client.count(prefix(metric), value, toTags) - def gauge(metric: String, value: Long): Unit = Context.client.longGauge(prefix(metric), value) + def gauge(metric: String, value: Long): Unit = Context.client.longGauge(prefix(metric), value, toTags) - def gauge(metric: String, value: Double): Unit = Context.client.doubleGauge(prefix(metric), value) + def gauge(metric: String, value: Double): Unit = Context.client.doubleGauge(prefix(metric), value, toTags)

176-201:⚠️ Potential issueOnly last join tag survives

addTag(Tag.Join, _)inside a loop overwrites previous values; multi‑join contexts lose information.Fix by aggregating join names before a single addTag call:

- joinNames.foreach(addTag(Tag.Join, _)) + if (joinNames.nonEmpty) { + addTag(Tag.Join, joinNames.mkString(",")) + }

222-222:⚠️ Potential issueMerge with context tags

Exception tags should merge with context tags, not replace them.

- Context.client.count(prefix(Name.Exception), 1, Map(Metrics.Name.Exception -> exceptionSignature)) + Context.client.count(prefix(Name.Exception), 1, toTags + (Metrics.Name.Exception -> exceptionSignature))

228-229:⚠️ Potential issueMerge with context tags

Additional tags should merge with context tags, not replace them.

- def distribution(metric: String, value: Long, additionalTags: Map[String, String]): Unit = - Context.client.distribution(prefix(metric), value, additionalTags) + def distribution(metric: String, value: Long, additionalTags: Map[String, String]): Unit = + Context.client.distribution(prefix(metric), value, toTags ++ additionalTags)

207-208:⚠️ Potential issueMerge with context tags

Additional tags should merge with context tags, not replace them.

- def increment(metric: String, additionalTags: Map[String, String]): Unit = - Context.client.count(prefix(metric), 1, additionalTags) + def increment(metric: String, additionalTags: Map[String, String]): Unit = + Context.client.count(prefix(metric), 1, toTags ++ additionalTags)

🧹 Nitpick comments (3)

online/src/main/scala/ai/chronon/online/metrics/FlexibleExecutionContext.scala (1)

38-44: Queue capacity increased by 10xThe queue capacity was significantly increased from 1000 to 10000 items. While this prevents task rejection under high load, monitor memory usage.

online/src/main/scala/ai/chronon/online/metrics/InstrumentedThreadPoolExecutor.scala (1)

30-30: Consider constructor-injected metrics contextFixed context limits reusability. Consider accepting it as a constructor parameter.

-class InstrumentedThreadPoolExecutor(corePoolSize: Int, +class InstrumentedThreadPoolExecutor(corePoolSize: Int, maximumPoolSize: Int, keepAliveTime: Long, unit: TimeUnit, workQueue: BlockingQueue[Runnable], threadFactory: ThreadFactory, + metricsContext: Metrics.Context = Metrics.Context(Metrics.Environment.Fetcher).withSuffix("threadpool"), metricsIntervalSeconds: Int = 15) extends ThreadPoolExecutor( corePoolSize, maximumPoolSize, keepAliveTime, unit, workQueue, threadFactory ) { // Reporter for periodic metrics private val metricsReporter: ScheduledExecutorService = buildMetricsScheduledExecutor() -protected val metricsContext: Metrics.Context = Metrics.Context(Metrics.Environment.Fetcher).withSuffix("threadpool")online/src/main/scala/ai/chronon/online/metrics/Metrics.scala (1)

130-146: Add error handling for OtelMetricsReporter creationCurrent implementation will throw exceptions on initialization failures. Consider adding fallback.

private val client: MetricsReporter = { // Can disable metrics collection for local / dev environments val metricsEnabled: Boolean = System.getProperty(MetricsEnabled, "true").toBoolean val reporter: String = System.getProperty(MetricsReporter, "otel") - reporter.toLowerCase match { - case "otel" | "opentelemetry" => - if (metricsEnabled) { - val metricReader = OtelMetricsReporter.buildOtelMetricReader() - val openTelemetry = OtelMetricsReporter.buildOpenTelemetryClient(metricReader) - new OtelMetricsReporter(openTelemetry) - } else { - new OtelMetricsReporter(OpenTelemetry.noop()) - } - case _ => - throw new IllegalArgumentException(s"Unknown metrics reporter: $reporter. Only opentelemetry is supported.") + try { + reporter.toLowerCase match { + case "otel" | "opentelemetry" => + if (metricsEnabled) { + val metricReader = OtelMetricsReporter.buildOtelMetricReader() + val openTelemetry = OtelMetricsReporter.buildOpenTelemetryClient(metricReader) + new OtelMetricsReporter(openTelemetry) + } else { + new OtelMetricsReporter(OpenTelemetry.noop()) + } + case _ => + throw new IllegalArgumentException(s"Unknown metrics reporter: $reporter. Only opentelemetry is supported.") + } + } catch { + case e: Exception => + org.slf4j.LoggerFactory.getLogger(getClass).error(s"Failed to initialize metrics: ${e.getMessage}", e) + new OtelMetricsReporter(OpenTelemetry.noop()) + }

📜 Review details

Configuration used: CodeRabbit UI

Review profile: CHILL

Plan: Pro (Legacy)

📒 Files selected for processing (15)

cloud_gcp/src/main/scala/ai/chronon/integrations/cloud_gcp/BigTableKVStoreImpl.scala(17 hunks)docker/fetcher/start.sh(2 hunks)maven_install.json(37 hunks)online/BUILD.bazel(4 hunks)online/src/main/java/ai/chronon/online/JavaFetcher.java(1 hunks)online/src/main/scala/ai/chronon/online/fetcher/Fetcher.scala(2 hunks)online/src/main/scala/ai/chronon/online/metrics/FlexibleExecutionContext.scala(1 hunks)online/src/main/scala/ai/chronon/online/metrics/InstrumentedThreadPoolExecutor.scala(1 hunks)online/src/main/scala/ai/chronon/online/metrics/Metrics.scala(6 hunks)online/src/main/scala/ai/chronon/online/metrics/MetricsReporter.scala(1 hunks)online/src/main/scala/ai/chronon/online/metrics/OtelMetricsReporter.scala(1 hunks)online/src/test/scala/ai/chronon/online/test/TagsTest.scala(2 hunks)service_commons/BUILD.bazel(1 hunks)service_commons/src/main/java/ai/chronon/service/ChrononServiceLauncher.java(1 hunks)tools/build_rules/dependencies/maven_repository.bzl(2 hunks)

✅ Files skipped from review due to trivial changes (2)

- online/src/main/java/ai/chronon/online/JavaFetcher.java

- online/src/main/scala/ai/chronon/online/fetcher/Fetcher.scala

🚧 Files skipped from review as they are similar to previous changes (8)

- service_commons/BUILD.bazel

- online/src/test/scala/ai/chronon/online/test/TagsTest.scala

- online/BUILD.bazel

- docker/fetcher/start.sh

- service_commons/src/main/java/ai/chronon/service/ChrononServiceLauncher.java

- tools/build_rules/dependencies/maven_repository.bzl

- cloud_gcp/src/main/scala/ai/chronon/integrations/cloud_gcp/BigTableKVStoreImpl.scala

- online/src/main/scala/ai/chronon/online/metrics/OtelMetricsReporter.scala

🧰 Additional context used

🧬 Code Graph Analysis (1)

online/src/main/scala/ai/chronon/online/metrics/InstrumentedThreadPoolExecutor.scala (2)

online/src/main/scala/ai/chronon/online/metrics/Metrics.scala (3)

Metrics(26-237)Environment(27-43)withSuffix(165-165)online/src/main/scala/ai/chronon/online/metrics/OtelMetricsReporter.scala (1)

distribution(73-78)

⏰ Context from checks skipped due to timeout of 90000ms (21)

- GitHub Check: cloud_aws_tests

- GitHub Check: service_tests

- GitHub Check: service_tests

- GitHub Check: online_tests

- GitHub Check: service_commons_tests

- GitHub Check: hub_tests

- GitHub Check: hub_tests

- GitHub Check: online_tests

- GitHub Check: flink_tests

- GitHub Check: cloud_gcp_tests

- GitHub Check: cloud_gcp_tests

- GitHub Check: cloud_aws_tests

- GitHub Check: aggregator_tests

- GitHub Check: flink_tests

- GitHub Check: api_tests

- GitHub Check: aggregator_tests

- GitHub Check: orchestration_tests

- GitHub Check: orchestration_tests

- GitHub Check: scala_compile_fmt_fix

- GitHub Check: api_tests

- GitHub Check: enforce_triggered_workflows

🔇 Additional comments (40)

online/src/main/scala/ai/chronon/online/metrics/MetricsReporter.scala (1)

8-17: LGTM - Well-structured metrics interfaceClean, consistent method signatures with appropriate parameter defaults.

online/src/main/scala/ai/chronon/online/metrics/InstrumentedThreadPoolExecutor.scala (2)

66-89: LGTM - Well-implemented task instrumentationProperly captures and reports wait time, execution time, and total time metrics.

91-100: LGTM - Proper resource cleanupCorrectly shuts down metrics reporter along with thread pool.

maven_install.json (37)

3-4: Metadata bump

1197-1203: OkHttp artifact added

1778-1784: Micrometer OTLP registry added

2131-2137: OpenTelemetry proto added

2147-2150: OTel API version bumped

2159-2249: OTel SDK modules updated to 1.49.0

2257-2298: Prometheus metrics modules added

4172-4185: Kotlin stdlib JDK7/JDK8 added

6420-6426: OkHttp/Okio dependency mapping

6738-6741: Micrometer OTLP mapping

7007-7024: OTel dependency mappings updated

8476-8482: Kotlin stdlib mapping updated

11312-11329: OkHttp package-to-class mapping

12166-12168: Micrometer OTLP package mapping

12540-12549: OTel proto package mapping

12578-12615: OTel exporter package mappings

12628-12631: SDK autoconfigure SPI mapping

12678-12699: Prometheus package mappings

24457-24463: Sources list includes OkHttp/Okio

24623-24624: Micrometer OTLP in sources

24729-24731: OTel proto in sources

24739-24747: OTel exporter in sources

24765-24775: Prometheus in sources

25298-25301: Kotlin JDK variants in sources

25925-25930: OkHttp/Okio in sources

26091-26092: Micrometer OTLP in sources

26197-26199: OTel proto in sources

26207-26215: OTel exporter in sources

26233-26243: Prometheus in sources

26766-26769: Kotlin JDK sources updated

27393-27398: OkHttp/Okio sources updated

27559-27560: Micrometer OTLP in sources

27665-27667: OTel proto in sources

27675-27683: OTel exporter sources

27698-27712: Prometheus sources list

28234-28237: Kotlin JDK in stdlib sources

30256-30303: SPI registrations for OTel exporters finalized

Summary

PR to swap our metrics reporter from statsd to open telemetry metrics. We need otel to allow us to capture metrics in Etsy without the need of a prometheus statsd exporter sidecar that they've seen issues with occasionally. Otel in general is a popular metrics ingestion interface with a number of supported backends (e.g. prom / datadog / gcloud / aws cloudwatch). Wiring up Otel also enables us to set up traces and spans in the repo in the future.

Broad changes:

Checklist

Tested via docker container and a local instance of open telemetry:

Start up fetcher docker svc

And then otel:

We see:

Summary by CodeRabbit

Summary by CodeRabbit

New Features

Refactor

Chores