-

Notifications

You must be signed in to change notification settings - Fork 41

Prompt user with TLC options before running #207

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

This allows for the user-provided options to be persisted across sessions for a workspace. It does not persist options across workspaces however.

Provides fallback behavior in the case where a user opens a file in vscode without opening a folder (which would open a workspace).

|

@lemmy how has the TLC options prompt been working for you? Are there changes you think should be made? I made a few updates here to persist the user-entered TLC options to the workspace configurations by default (or global if a workspace is not open). When you get the chance, can you take a look and let me know what you think? |

|

Not to comment on the technical aspects, but I've been using this feature (earlier commit) for a few weeks now and it has significantly reduced the number of times I ran TLC with bogus parameters (because command-line parameters are store as extension settings). Unless there is a better place to show TLC parameters before model-checking, I suggest merging this PR asap. |

|

FWIW: I suggest not storing the settings at the workspace or global level; they are model-level settings. This extension has no corresponding concept (config \cup parameters \cup JVM tuning make up a Toolbox's model).

For now, the input box should just be pre-populated with a user's previous input. |

This reverts commit 6966c73. I misunderstood lemmy's comments

While this is true, I tend to pass the same few options to TLC. For my usage, it seems that the friction of typing in the options again when you (re-)open a window is more than that of clearing the options when you switch models. Is this not the case in your experience? |

|

My use of TLA+ might not be representative because I work more on TLA+ rather than with TLA+. That said, I would assume that the average user has one vscode workspace per spec, and, thus, would want parameters to be stored at the workspace level (if at all). |

|

@alygin, @hwayne, do you have any thoughts on if TLC options should be:

For reference, the clip below shows what the implementation currently looks like. By default it prompts with the options tlc_options.mp4 |

|

/cc @Calvin-L @will62794 (please ignore if you don't use the TLA+ vscode extension) |

I think such an option would be great. Persisting the options for a workspace seems fine, but I have no strong opinion on that. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This PR adds some user friction, but in most cases it just requires the user to press enter since the options are pre-populated from the previous run.

There's the "Run last model check again" command (handler) that should eliminate this friction for most cases by running the last check with no options dialog. Unfortunately, now it triggers the prompt.

Could you, please, make it always use the last options without asking the user? This will bring model checking back to one shortcut (or a single mouse click) distance from the user.

|

As for the user preferences destination, I think the "Workspace first" attitude is reasonable. But I'm afraid the extension behavior will be confusing in the following scenario:

I have no idea on how to handle that correctly. Leave it this way? Reset the options provided by the user? |

Sure thing. I just pushed a change to show the prompt for the I didn't realize that the "Evaluate expression" command also runs the model checker. I opted to not prompt for that command. Do you agree with this decision or do you think it should also prompt for "Evaluate expression"? |

|

Kudos, SonarCloud Quality Gate passed!

|

Off the top of my head, I can't think of parameters that I would want to pass when evaluating (constant) expressions. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Looks good, thank you!

|

This PR interferes with the functionality to evaluate the selected expression from the editor's right-click context menu. |

@lemmy is this just in your fork, or also in the main repo? I added a showOptionsPrompt arg to the |

|

@klinvill Thanks, that might very well be it. Will check! Btw. I realized that the dialog could be used to support evaluating expressions in a spec that declares variables and/or constants, but lacks a model: ---------------------- MODULE ATD ---------------------

EXTENDS Naturals

CONSTANT N

ASSUME NIsPosNat == N \in Nat \ {0}

Node == 0 .. N-1

=============================================================================If a user tries to evaluate It would be nice if one could type |

|

@klinvill Can you suggest how to logically merge your changes with the ones related to the TLC debugger? Both of us changed APIs in |

Addresses #172.

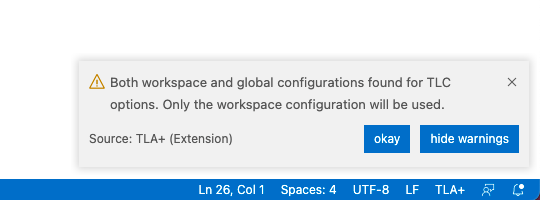

The idea behind this PR is to pop up an input box prompt with the TLC options when running the "Check model with TLC" command. This both shows the user what options TLC will run with and gives them a convenient place to change the options. This PR then saves the user-provided options to the workspace settings if a workspace is open, or to the global settings if not, allowing the same options to be prompted to the user for the next run, even across sessions.

This PR adds some user friction, but in most cases it just requires the user to press enter since the options are pre-populated from the previous run.

Outstanding questions/concerns I have with this PR are:

getTlcOptions()and config inbuildTlcOptions().getTlcOptions()would require UI interaction. This would mean integrating a framework like vscode-extension-tester or puppeteer/selenium. This would add complexity and a maintenance burden. Would it be worth it?