chore(storage): Adjust the tier_compaction limit to reduce the impact on ci #9534


…nto li0k/storage_revert_compaction_config


A revert PR is welcome.

If we revert it, will that affect the performance of streaming jobs? If it could have a large performance impact, I suggest we build two images, one with and one without this PR, both based on latest


Codecov Report

Coverage Diff

| | main | #9534 | +/- |
|---|---|---|---|
| Coverage | 70.73% | 70.73% | |
| Files | 1233 | 1233 | |
| Lines | 206688 | 206715 | +27 |
| Hits | 146199 | 146221 | +22 |
| Misses | 60489 | 60494 | +5 |

... and 3 files with indirect coverage changes


It might, so I tried to make as few changes as possible, and we will need to keep a close eye on it. The e2e failure rate on main is so high that I would prefer to merge first to fix the problem.

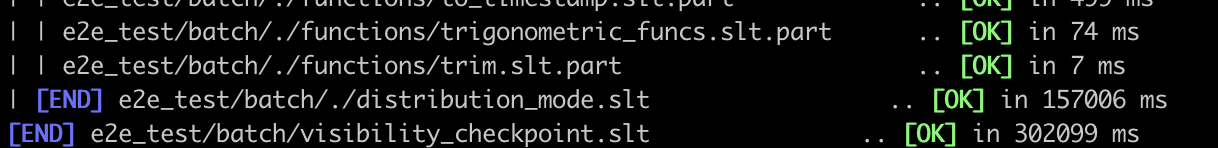

e2e test

The e2e test shows that after 8996 the e2e run time increased, and the resulting timeouts raised the failure rate.

Reason

I tried to reproduce the problem locally and observed the system's behavior via Grafana. The current logic puts a limit on the size of the SST file in trivial_move. In the e2e test, however, the SSTs hold little data and hit this limit, so trivial_move cannot be triggered. Without trivial_move, and because the e2e test data do not overlap, the new picker cannot select enough levels, so tier_compaction cannot be triggered either. And because the amount of data is small, base-level compaction is not triggered.

TLDR
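As a rough illustration of the reasoning above, here is a minimal Python sketch of how three such conditions could leave small, non-overlapping SSTs with no compaction task at all. It is a toy model, not RisingWave's picker: the `Sst` class, the `pick_task` function, the one-SST-per-sub-level simplification, and all three thresholds are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Sst:
    """Toy SST: an inclusive key range plus a size. Hypothetical model."""
    key_range: Tuple[str, str]
    size_bytes: int


def overlaps(a: Sst, b: Sst) -> bool:
    """True if the two inclusive key ranges intersect."""
    return a.key_range[0] <= b.key_range[1] and b.key_range[0] <= a.key_range[1]


def can_trivial_move(sst: Sst, target_level: List[Sst], min_move_size: int) -> bool:
    """A trivial move needs a large enough SST and no overlap in the target level."""
    if sst.size_bytes < min_move_size:
        return False  # the size limit described above rejects small SSTs
    return not any(overlaps(sst, other) for other in target_level)


def pick_task(
    sub_levels: List[Sst],      # simplification: one SST per sub-level, oldest first
    target_level: List[Sst],
    min_move_size: int,         # assumed threshold, not the real config value
    min_tier_levels: int,       # assumed threshold
    min_base_bytes: int,        # assumed threshold
) -> Optional[str]:
    """Return the name of the first task whose condition holds, or None."""
    if not sub_levels:
        return None
    oldest = sub_levels[0]
    if can_trivial_move(oldest, target_level, min_move_size):
        return "trivial_move"
    # Tier compaction in this model needs enough sub-levels that overlap the oldest one.
    overlapping = [s for s in sub_levels if overlaps(s, oldest)]
    if len(overlapping) >= min_tier_levels:
        return "tier_compaction"
    # Base-level compaction in this model needs enough total data.
    if sum(s.size_bytes for s in sub_levels) >= min_base_bytes:
        return "base_compaction"
    return None


# Small, non-overlapping SSTs, as in the e2e test: nothing is picked.
small = [Sst(("a", "b"), 1024), Sst(("c", "d"), 1024), Sst(("e", "f"), 1024)]
print(pick_task(small, [], 4 << 20, 2, 64 << 20))  # prints None
```

In this model, lowering the assumed `min_move_size` to 0 makes the same input return `"trivial_move"`, which mirrors the idea that relaxing the size limit lets small SSTs move down instead of piling up as sub-levels.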

Result


I hereby agree to the terms of the RisingWave Labs, Inc. Contributor License Agreement.

What's changed and what's your intention?

The e2e tests found that a large number of compaction tasks affected the batch query latency. It is suspected that the frequent compaction tasks cause more cache misses, which in turn increases the latency. I have observed that cg3 accumulates hundreds of sub_levels over the whole batch e2e test, which will affect our read performance. Based on this information, I found a factor:

Changes to this pr

Checklist For Contributors

./risedev check (or alias, ./risedev c)

Checklist For Reviewers
