
| 1 | +--- |

| 2 | +feature: watermark-operators-explained |

| 3 | +authors: |

| 4 | + - "Bugen Zhao" |

| 5 | +start_date: "2022/10/25" |

| 6 | +--- |

| 7 | + |

| 8 | +# Watermark Operators Explained |

| 9 | + |

| 10 | +In [RFC: The WatermarkFilter and StreamSort Operator](https://github.com/risingwavelabs/rfcs/pull/2), we showed how watermarks will be used in RisingWave. This doc describes how the key operators should be implemented in our system. |

| 11 | + |

| 12 | + |

| 13 | + |

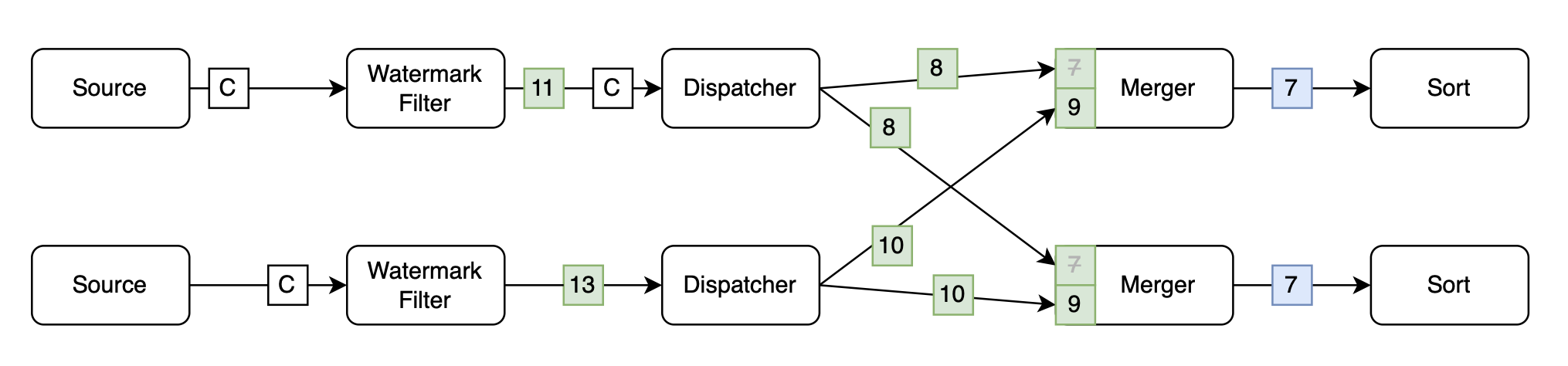

| 14 | +> Overview of the design. The image is explained in detail below. |

| 15 | + |

| 16 | +The general idea is similar to Flink's design, as shown above, but there are several major differences in our system: |

| 17 | + |

| 18 | +- Watermarks can be generated anywhere in the stream graph; they are not tied to the sources, nor are they a property of them. |

| 19 | +- We can scale the stream graph online, so state persistence and recovery must be considered. |

| 20 | + |

| 21 | +The following sections introduce the three operators one by one. |

| 22 | + |

| 23 | +## Watermark Filter (Stateful) |

| 24 | + |

| 25 | +The Watermark Filter tracks a timestamp-like column of its input records to maintain the watermark, and filters out outdated records based on the watermark and a fixed **timeout**. The Watermark Filter is therefore the source of truth for `Watermark` messages, and it does not need to reorder or buffer the input chunks. |
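The filtering behavior can be sketched as follows. This is only an illustration under stated assumptions: the RFC does not fix the exact rule, so the sketch assumes the watermark is the largest timestamp seen so far minus the timeout, and the names `WatermarkFilter`, `on_chunk`, and the `(timestamp, payload)` record shape are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple

Record = Tuple[int, str]  # (timestamp, payload); hypothetical record shape


@dataclass
class WatermarkFilter:
    timeout: int                     # fixed timeout (allowed lateness)
    watermark: Optional[int] = None  # None until the first record arrives

    def on_chunk(self, chunk: Iterable[Record]) -> Tuple[List[Record], Optional[int]]:
        """Filter one chunk; return (kept records, watermark to emit or None)."""
        kept: List[Record] = []
        previous = self.watermark
        for ts, payload in chunk:
            if self.watermark is not None and ts < self.watermark:
                continue  # outdated record: drop it, nothing is buffered
            kept.append((ts, payload))
            # Assumption: watermark = max timestamp seen so far - timeout.
            candidate = ts - self.timeout
            if self.watermark is None or candidate > self.watermark:
                self.watermark = candidate
        # Emit a Watermark message only when the value advanced.
        emitted = self.watermark if self.watermark != previous else None
        return kept, emitted


wf = WatermarkFilter(timeout=2)
first_kept, first_emitted = wf.on_chunk([(10, "a"), (7, "b"), (12, "c"), (9, "d")])
second_kept, second_emitted = wf.on_chunk([(10, "e"), (9, "f")])
```

Records are forwarded in arrival order, and the `Watermark` is emitted after the chunk, so every record that follows `Watermark(t)` has a timestamp of at least `t`.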

| 26 | + |

| 27 | + |

| 28 | + |

| 29 | +There are two notable facts: |

| 30 | + |

| 31 | +- The watermark filters run independently on each partition (parallel unit), so each one emits `Watermark` messages **based on its local input**, such as the specific splits assigned to the upstream source executor. The watermarks of different partitions can therefore differ slightly. |

| 32 | +- Watermark semantics require that the timestamp of every record after a `Watermark(t)` message **must** not be less than `t`. |

| 33 | + |

| 34 | +Based on the above, consider a case of scale-in: the partition of one parallel unit (to be removed) with watermark `5` is split and merged into a parallel unit with watermark `3`. We must then treat the watermark of this new partition as `max(3, 5) = 5` and filter out all records with a timestamp less than `5`, including those from the previous partition. This lets us simplify the design and avoid buffering records. |

| 35 | + |

| 36 | +This leads to the design: |

| 37 | + |

| 38 | +- **Schema:** only buffer the watermark messages |

| 39 | + `Vnode(..) [key], Watermark` |

| 40 | + |

| 41 | +- **On checkpoint** |

| 42 | + For all vnodes handled by this parallel unit, persist the same local watermark value. |

| 43 | + |

| 44 | +- **On fail-over** |

| 45 | + Read the state of any vnode handled by this parallel unit and take its value as the local watermark. |

| 46 | + |

| 47 | +- **On scaling** |

| 48 | + Scan **all states** and take the **maximum** value as the watermark. |

| 49 | + |

| 50 | +- **On any initialization (the first barrier after creating / scaling)** |

| 51 | + (May) initialize, and always emit the `Watermark` message immediately. Explained in section [Exchange (Stateless)](#exchange-stateless). |

| 52 | + |

| 53 | +By always using the global-maximum value as the watermark after scaling, we ensure that no existing or new partition will emit a `Watermark` message with a smaller value after scaling. This is necessary for implementing the stateless Exchange. |
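The persistence and recovery rules above can be sketched as follows. This is a minimal illustration, not the actual implementation: a plain dictionary stands in for the state store, and the function names are hypothetical.

```python
from typing import Dict, Iterable, Optional

# Stand-in for the state table: vnode -> persisted watermark.
StateTable = Dict[int, int]


def on_checkpoint(state: StateTable, owned_vnodes: Iterable[int], local_watermark: int) -> None:
    """Persist the same local watermark for every vnode owned by this parallel unit."""
    for vnode in owned_vnodes:
        state[vnode] = local_watermark


def recover_on_failover(state: StateTable, owned_vnodes: Iterable[int]) -> int:
    """All owned vnodes hold the same value, so reading any one of them is enough."""
    any_vnode = next(iter(owned_vnodes))
    return state[any_vnode]


def recover_on_scaling(state: StateTable) -> Optional[int]:
    """Scan all states and take the global maximum (None if nothing was persisted yet)."""
    return max(state.values(), default=None)


# The scale-in example from the text: one unit at watermark 3, another at watermark 5.
state: StateTable = {}
on_checkpoint(state, owned_vnodes=[0, 1], local_watermark=3)
on_checkpoint(state, owned_vnodes=[2, 3], local_watermark=5)

failover_watermark = recover_on_failover(state, owned_vnodes=[0, 1])
scaling_watermark = recover_on_scaling(state)
```

In this example, fail-over of the first unit restores its local watermark `3`, while scaling yields `5` for every partition, matching `max(3, 5) = 5` in the scale-in case.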

| 54 | + |

| 55 | +In the implementation, if we don’t want to distinguish fail-over from scaling, we may always take the global maximum. |

| 56 | + |

| 57 | +For source operators, the `Vnode` here makes less sense; what we really want is the Split ID. However, we currently have no support for “external distribution”, so we can only treat the vnode as a **division** assigned by the meta service and should not care about the value itself. |

| 58 | + |

| 59 | +## Exchange (Stateless) |

| 60 | + |

| 61 | +After the Watermark Filter on each parallel unit, we need to handle the `Watermark` message across the Exchange. The basic idea is very similar to Flink. We show that the Exchange needs no significant changes and remains **stateless**, given the design of the Watermark Filter above, while naturally supporting fail-over and scaling. |

| 62 | + |

| 63 | + |

| 64 | + |

| 65 | +### Dispatcher |

| 66 | + |

| 67 | +- **On `Watermark`** |

| 68 | + Broadcast the message to all outputs, like `Barrier`. |

| 69 | + |

| 70 | +### Merger |

| 71 | + |

| 72 | +There will be multiple inputs (upstreams) for the Merger. As a watermark indicates that there will be no records with a smaller timestamp from now on, the Merger should always **take the minimum watermark** from all inputs. |

| 73 | + |

| 74 | +- **On any initialization** |

| 75 | + As the Watermark Filter always emits a `Watermark` message at this time, we’ll receive watermarks from all inputs now. |

| 76 | + |

| 77 | +- **On `Watermark`** |

| 78 | + Buffer the message in an in-memory per-input queue. |

| 79 | + **Only when** all queues are non-empty can we be sure which `Watermark` is the minimum across all inputs, and emit it to the downstream. After that, remove all `Watermark` messages with the same value as the emitted one. |

| 80 | + |

| 81 | +- **On `Chunk`** |

| 82 | + Forward the chunk immediately, regardless of the current watermark. Note that deferring a `Watermark` message until after a chunk doesn’t break correctness. |

| 83 | + |

| 84 | +- **On `Barrier`** |

| 85 | + Align the barrier like before. |

| 86 | + |

| 87 | +- **On upstream scaling** |

| 88 | + Initialize an empty queue for each newly registered upstream. |

| 89 | + As we’ve ensured that the watermark emitted right after scaling is the global maximum, it must be **not smaller than** the watermark emitted by the Merger before the scaling. So the monotonicity of `Watermark` messages still holds. |

| 90 | + |

| 91 | +- **On current fragment scaling** |

| 92 | + The Merger on the new partition will receive watermarks from all inputs immediately, and reach the global watermark. |

| 93 | + |

| 94 | +Right before aligning all barriers, the mergers in all (downstream) partitions will have received **the same set** of `Watermark` messages from all inputs during this epoch. The algorithm above is deterministic, so the same watermark value is reached in all partitions. That is, the watermark after the Exchange becomes a **global watermark** (marked in blue). This is a must-have property for the Sort operator. |
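The Merger's watermark handling described above can be sketched as follows. This is an illustrative sketch only: the class and method names are hypothetical, upstreams are identified by plain integers, and chunk forwarding and barrier alignment are left out.

```python
from collections import deque
from typing import Deque, Dict, Iterable, Optional


class MergerWatermarks:
    """Per-input watermark queues; emits the minimum once every input has reported."""

    def __init__(self, upstream_ids: Iterable[int]) -> None:
        self.queues: Dict[int, Deque[int]] = {u: deque() for u in upstream_ids}

    def add_upstream(self, upstream_id: int) -> None:
        # On upstream scaling: start with an empty queue for the new input.
        self.queues[upstream_id] = deque()

    def on_watermark(self, upstream_id: int, value: int) -> Optional[int]:
        """Buffer the message; return the watermark to emit downstream, if any."""
        self.queues[upstream_id].append(value)
        if any(not q for q in self.queues.values()):
            return None  # some input has not reported yet
        # Each queue is non-decreasing, so its head is that input's minimum.
        minimum = min(q[0] for q in self.queues.values())
        # Clean up every buffered message equal to the emitted value.
        for q in self.queues.values():
            while q and q[0] == minimum:
                q.popleft()
        return minimum


merger = MergerWatermarks([0, 1])
outputs = [
    merger.on_watermark(0, 3),  # input 1 has not reported yet
    merger.on_watermark(0, 5),
    merger.on_watermark(1, 4),  # heads are 3 and 4
    merger.on_watermark(1, 6),  # heads are 5 and 4
    merger.on_watermark(0, 7),  # heads are 5 and 6
]
```

The sketch emits at most one watermark per incoming message, so a remaining smaller value is emitted on the next arrival. Nothing here is persisted, which is what keeps the Exchange stateless.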

| 95 | + |

| 96 | +~~We also have Barrier Aligner for those operators with multiple upstreams. The behavior should be similar and also simpler, compared to the Merger.~~ |

| 97 | + |

| 98 | +## Sort (Stateful) |

| 99 | + |

| 100 | +An infinite stream cannot be sorted as a whole, so streaming operators normally cannot benefit from ordered input, as Sort Aggregation and Window Functions would. Fortunately, watermarks let us cut the stream into finite parts and sort each part whenever the watermark advances, so the stream can be transformed into an ordered, append-only one. We give this responsibility to a separate operator named Sort, which must be stateful because it has to buffer records until the watermark advances. |

| 101 | + |

| 102 | + |

| 103 | + |

| 104 | +Because the output is ordered, every output record could be paired with a watermark, but we may still omit some watermarks for performance. |

| 105 | + |

| 106 | +To keep it correct and simple, a Sort operator **requires a global watermark** emitted from the Exchange. Thus, it cannot be placed directly after the Watermark Filter in the same fragment. We may need to manually insert an Exchange for this. |

| 107 | + |

| 108 | +The behavior of Sort operator: |

| 109 | + |

| 110 | +- **Schema:** only buffer the records |

| 111 | + `(Timestamp, Stream Key) [order key], Record` distributed by the consistent hash |

| 112 | + |

| 113 | +- **On `Chunk`** |

| 114 | + Buffer the records in an in-memory B-Tree. No need to operate on the storage memory table. |

| 115 | + |

| 116 | +- **On checkpoint** |

| 117 | + Persist all in-memory records to the state store to make them consistent. |

| 118 | + |

| 119 | +- **On any initialization** |

| 120 | + Fetch all records from the state store to the in-memory buffer. |

| 121 | + |

| 122 | +- **On `Watermark`** |

| 123 | + Drain the range `..watermark` from both the in-memory buffer and the state store (using a `RangeDelete` operation), and yield the drained records in order. Then propagate the `Watermark`. |

| 124 | + No need to maintain the watermark, either in memory or in the state store. |

| 125 | + |

| 126 | +- **On scaling** |

| 127 | + Reset the state table with the new partition info. |

| 128 | + Scaling only occurs after a checkpoint, so we **must have aligned** the global watermark. Even if the Watermark Filter is also scaled, the emitted watermark won’t decrease. Therefore, merging records from the buffers of other partitions won’t break the order of the output. |
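The behavior of the Sort operator can be sketched as follows. This is a simplified illustration under stated assumptions: a sorted Python list stands in for the in-memory B-Tree, a dictionary stands in for the state store, and `SortBuffer` and its method names are hypothetical.

```python
import bisect
from typing import Dict, Iterable, List, Tuple

OrderKey = Tuple[int, int]  # (timestamp, stream key)


class SortBuffer:
    def __init__(self, state_store: Dict[OrderKey, str]) -> None:
        self.state_store = state_store  # stand-in for the persistent state table
        # On any initialization: fetch all persisted records into memory.
        self.buffer: List[Tuple[OrderKey, str]] = sorted(state_store.items())

    def on_chunk(self, records: Iterable[Tuple[int, int, str]]) -> None:
        # Buffer in memory only, keeping the buffer sorted by the order key.
        for ts, stream_key, payload in records:
            bisect.insort(self.buffer, ((ts, stream_key), payload))

    def on_checkpoint(self) -> None:
        # Persist all in-memory records so the state store is consistent.
        for key, payload in self.buffer:
            self.state_store[key] = payload

    def on_watermark(self, watermark: int) -> List[Tuple[int, int, str]]:
        # (watermark,) sorts before every (watermark, stream_key), so `cut`
        # is the index of the first record with timestamp >= watermark.
        cut = bisect.bisect_left(self.buffer, ((watermark,),))
        drained = self.buffer[:cut]
        del self.buffer[:cut]
        for key, _ in drained:
            self.state_store.pop(key, None)  # plays the role of RangeDelete
        return [(key[0], key[1], payload) for key, payload in drained]


store: Dict[OrderKey, str] = {}
sorter = SortBuffer(store)
sorter.on_chunk([(5, 1, "a"), (3, 2, "b"), (7, 3, "c")])
sorter.on_checkpoint()
ordered = sorter.on_watermark(6)

recovered = SortBuffer(store)  # e.g. after fail-over
remaining = recovered.on_watermark(8)
```

The range `..watermark` is treated as exclusive here, so a record whose timestamp equals the watermark stays buffered until the watermark advances past it. The watermark itself is never stored.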

| 129 | + |

| 130 | +Furthermore, the sorting logic can also be embedded into existing stateful operators to reduce unnecessary updates. For example, if one wants to “freeze” the aggregation result of a window when it’s still not closed, there’s no need to maintain this aggregation group any more. |
