
Plenty of workers suspended for several days. #331


Comments


The config is fine as far as I know. Having scale-in protection enabled is correct: the plugin enables scale-in protection if it is not already set, because the plugin wants to control which instances are terminated. Without scale-in protection, an instance that is not idle might be terminated when the target capacity is adjusted. The instances being in the suspended state makes me think there is an issue with setting up the Jenkins agents on the instances. I think I have seen this happen in the past when SSH credentials were misconfigured, but it's been a while and my memory is poor. Can you provide the logs for this event?
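To illustrate the scale-in protection point: without it, the ASG itself chooses which instances to terminate when the desired capacity drops, and it has no idea which agents are running Jenkins builds. The sketch below is a hypothetical, simplified selection policy (not the plugin's actual code); the `Instance` type and `pick_instances_to_terminate` function are made up for this example. It only ever picks idle agents for termination.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    instance_id: str
    busy: bool  # hypothetical flag: True while a Jenkins build runs on this agent


def pick_instances_to_terminate(instances, target_capacity):
    """Return IDs to terminate so the fleet shrinks toward target_capacity.

    Hypothetical policy for illustration: busy agents are never chosen.
    If there are not enough idle agents, fewer instances are returned and
    the rest would have to wait until their builds finish.
    """
    excess = len(instances) - target_capacity
    if excess <= 0:
        return []
    idle_ids = [i.instance_id for i in instances if not i.busy]
    return idle_ids[:excess]


fleet = [Instance("i-a", True), Instance("i-b", False), Instance("i-c", False)]
print(pick_instances_to_terminate(fleet, 1))  # the busy "i-a" is never picked
```

If the ASG were free to pick instead, it could just as well terminate `i-a` in the middle of a build, which is why the plugin keeps scale-in protection on and decides itself.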

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. If you want this issue to never become stale, please ask a maintainer to apply the "stalebot-ignore" label.

BTW can we remove

This issue was closed because it has become stale with no activity.

Issue Details

Not sure if this is related to #322, so I'm creating a separate issue.

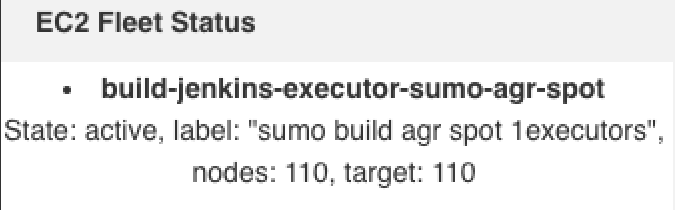

Recently we noticed a very long job queue on Jenkins. It turned out all EC2 fleets were at their maximum size, but plenty of instances were "suspended" and had not been disconnected from Jenkins. The EC2 instances themselves were fine on the AWS side.

Even after I killed the suspended instances with a script, the new instances did some work, but some of them also became suspended after a while. The idle timeout was not respected for those: we have it configured to 3 minutes, yet some instances stayed connected for several days.
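To make the "idle timeout not respected" symptom concrete: with a 3-minute idle timeout, no agent should stay idle much longer than that. A hypothetical check over a snapshot of node states could flag the offenders. The `Node` fields and the `overdue_nodes` helper are invented for illustration; in practice the data would have to be collected from Jenkins (e.g. what the `/computer/` page shows), which this sketch does not do.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple

IDLE_TIMEOUT = timedelta(minutes=3)  # the idle timeout configured for our fleets


@dataclass
class Node:
    name: str
    idle_since: Optional[datetime]  # hypothetical: None while running a build
    suspended: bool  # hypothetical: shown as "(suspended)" on /computer/


def overdue_nodes(nodes: List[Node], now: datetime) -> List[Tuple[str, bool]]:
    """Return (name, suspended) for nodes idle longer than IDLE_TIMEOUT.

    With a working idle timeout this list should stay (almost) empty;
    suspended nodes lingering for days would show up here.
    """
    return [
        (n.name, n.suspended)
        for n in nodes
        if n.idle_since is not None and now - n.idle_since > IDLE_TIMEOUT
    ]


now = datetime(2022, 5, 1, 12, 0, tzinfo=timezone.utc)
snapshot = [
    Node("busy", None, False),
    Node("fresh", now - timedelta(minutes=1), False),
    Node("stuck", now - timedelta(days=2), True),
]
print(overdue_nodes(snapshot, now))
```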

Describe the bug

Example observation:

A lot of builds are waiting for an agent:

And we can see that the maximum number of agents for this label is already online:

Still, I see a lot of workers sitting empty, with no job assigned.

Some of them show "(suspended)"; in the view below, these are all marked with a red cross (screenshot from https://<jenkins url>/computer/). The vast majority of nodes have a red cross, meaning "suspended".

One of those instances:

This one had been alive for several hours without building a single job, yet plenty of jobs were waiting tens of minutes for this label to become available.

At this point we had to turn off the ec2-fleet-plugin and go back to the ec2-plugin. After we switched all the work to workers managed by the ec2-plugin, the situation stabilised. However, we would love to go back to using this plugin (ec2-fleet-plugin), because it is better at handling spot workers and multiple instance types.

To Reproduce

I don't know. It just happened for all of our labels using this plugin.

Environment Details

Plugin Version?

2.5.0

Jenkins Version?

2.336

Spot Fleet or ASG?

ASG

Label based fleet?

No

Linux or Windows?

Linux

EC2Fleet Configuration as Code

Is this config OK?

Anything else unique about your setup?

Not sure if it's important, but our ASGs have:
